Stephen James

CEO & Founder of Neuracore | Assistant Professor at Imperial College London

Safe Whole-body Intelligent Robotics Lab (SWIRL)

London, UK

I am CEO & Founder of Neuracore, a robot learning cloud ecosystem and community, and Assistant Professor at Imperial College London, where I lead the Safe Whole-body Intelligent Robotics Lab (SWIRL). Our lab pushes the boundaries of robot learning by developing safe, intelligent systems that leverage their entire physical embodiment. We operate at the intersection of online reinforcement learning, offline reinforcement learning, and imitation learning, with a relentless focus on sample efficiency and safety.

Previously, I was the principal investigator of the Dyson Robot Learning Lab in London, UK, where I led a large concentration of the top robot learning talent in the world. Prior to that, I was a postdoctoral fellow at UC Berkeley, advised by Pieter Abbeel, and completed my PhD at Imperial College London, under the supervision of Andrew Davison. I am Associate Editor of IEEE RAL and ICRA, and serve as Area Chair of NeurIPS, CVPR, ICML, ICLR, and TMLR. For a formal bio, please see here.

selected publications

Green Screen Augmentation Enables Scene Generalisation in Robotic Manipulation. arXiv preprint arXiv:2407.07868, 2024

Continuous Control with Coarse-to-fine Reinforcement Learning. Conference on Robot Learning, 2024

Render and Diffuse: Aligning Image and Action Spaces for Diffusion-based Behaviour Cloning. Robotics: Science and Systems, 2024

Coarse-to-Fine Q-attention: Efficient Learning for Visual Robotic Manipulation via Discretisation. Conference on Computer Vision and Pattern Recognition, 2022

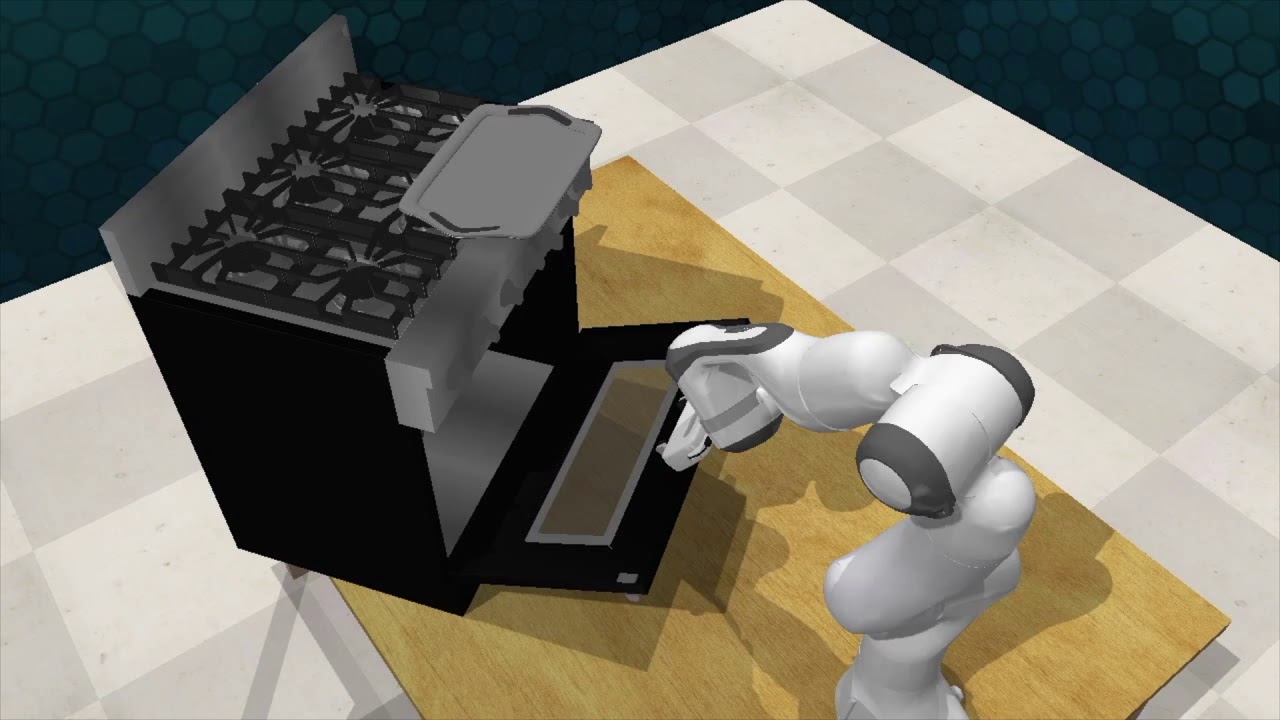

RLBench: The Robot Learning Benchmark & Learning Environment. IEEE Robotics and Automation Letters, 2020

Sim-to-Real via Sim-to-Sim: Data-efficient Robotic Grasping via Randomized-to-Canonical Adaptation Networks. Conference on Computer Vision and Pattern Recognition, 2019

Transferring End-to-End Visuomotor Control from Simulation to Real World for a Multi-Stage Task. Conference on Robot Learning, 2017